One of the big projects for me this quarter was getting our Talos slaves configured as a pool of machines shared across branches. For those interested, the details are being tracked in bug 488367.

This is a continuation of our work on pooling our slaves, like we've done over the past year with our build, unittest, and l10n slaves.

Up until now each branch has had a dedicated set of Mac Minis to run performance tests for just that branch, on five different operating systems. For example, the Firefox 3.0 branch used to have 19 Mac Minis doing regular Talos tests: 4 of each platform (except for Leopard, which had 3). Across our 4 active branches (Firefox 3.0, 3.5, 3.next, and TraceMonkey), we have around 80 minis in total! That's a lot of minis!

What we've been working towards is to put all the Talos slaves into one pool that is shared between all our active branches. Slaves will be given builds to test in FIFO order, regardless of which branch the build is produced on.
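To make the FIFO idea concrete, here is a minimal sketch in Python. It is not our actual Buildbot configuration: the `TalosPool` class, the slave names, and the build ids are all made up for illustration, and it ignores the fact that a build can only be tested on a slave of the matching platform.

```python
from collections import deque


class TalosPool:
    """Hypothetical shared pool: builds are handed out in arrival order."""

    def __init__(self, slaves):
        self.idle = deque(slaves)   # slaves waiting for work
        self.pending = deque()      # (branch, build_id) in arrival order

    def submit(self, branch, build_id):
        # The branch is recorded but plays no part in ordering.
        self.pending.append((branch, build_id))

    def assign(self):
        # Pair idle slaves with the oldest pending builds until one runs out.
        assignments = []
        while self.idle and self.pending:
            slave = self.idle.popleft()
            branch, build_id = self.pending.popleft()
            assignments.append((slave, branch, build_id))
        return assignments

    def release(self, slave):
        # A slave that has finished testing goes back to the idle queue.
        self.idle.append(slave)


pool = TalosPool(["slave-01", "slave-02"])
pool.submit("Firefox 3.5", "build-a")
pool.submit("TraceMonkey", "build-b")
pool.submit("Firefox 3.5", "build-c")
first_round = pool.assign()
pool.release("slave-01")
second_round = pool.assign()
```

With two slaves and three builds, the first two builds start right away no matter which branch they came from, and the third starts as soon as any slave is released.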

This new pool will be...

Faster

With more slaves available to all branches, the time to wait for a free slave will go down, so testing can start more quickly...which means you get your results sooner!

Smarter

It will be able to handle varying load between branches. If there's a lot of activity on one branch, like on the Firefox 3.5 branch before a release, then more slaves will be available to test those builds and won't be sitting idle waiting for builds from low activity branches.

Scalable

We will be able to scale our infrastructure much better using a pooled system. Similar to how moving to pooled build and unittest slaves has allowed us to scale based on number of checkins rather than number of branches, having pooled Talos slaves will allow us to scale our capacity based on number of builds produced rather than the number of branches.

In the current setup, each new release or project branch requires an allocation of at least 15 minis dedicated to that branch.

Once all our Talos slaves are pooled, we will be able to add Talos support for new project or release branches with a few configuration changes instead of waiting for new minis to be provisioned.

This means we can get up and running with new project branches much more quickly!

More Robust

We'll also be in a much better position in terms of maintenance of the machines. When a slave goes offline, the test coverage for any one branch won't be jeopardized since we'll still have the rest of the slaves that can test builds from that branch.

In the current setup, if one or two machines of the same platform need maintenance on one branch, then our performance test coverage of that branch is significantly impacted. With only one or two machines remaining to run tests on that platform, it can be difficult to determine whether a performance regression is caused by a code change or by some machine issue. Losing two or three machines in this scenario is enough to close the tree, since we no longer have reliable performance data.

With pooled slaves we would see a much more gradual decrease in coverage when machines go offline. It's the difference between losing one third of the machines on your branch, and losing one tenth.

When is all this going to happen?

Some of it has started already! We have a small pool of slaves testing builds from our four branches right now. If you know how to coerce Tinderbox to show you hidden columns, you can take a look for yourself. They're also reporting to the new graph server using machine names starting with 'talos-rev2'.

We have some new minis waiting to be added to the pool. Together with existing slaves, this will give us around 25 machines in total to start off the new pool. This isn't enough yet to be able to test every build from each branch without skipping any, so for the moment the pool will be skipping to the most recent build per branch if there's any backlog.
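The skipping behaviour can be sketched roughly like this. Again, this is only an illustration and not the real scheduler code: `skip_to_latest` and the build ids are hypothetical, and the pending list is assumed to be in arrival order.

```python
def skip_to_latest(pending):
    """Keep only the most recent pending build for each branch.

    `pending` is a list of (branch, build_id) tuples in arrival order.
    The result is ordered by when each surviving build arrived.
    """
    latest = {}
    for position, (branch, build_id) in enumerate(pending):
        # A later build on the same branch replaces the earlier one.
        latest[branch] = (position, build_id)
    survivors = sorted(latest.items(), key=lambda item: item[1][0])
    return [(branch, build_id) for branch, (_, build_id) in survivors]


backlog = [
    ("Firefox 3.5", "build-a"),
    ("Firefox 3.0", "build-b"),
    ("Firefox 3.5", "build-c"),
]
to_test = skip_to_latest(backlog)
```

Here `build-a` is skipped because a newer Firefox 3.5 build is already waiting, while the single Firefox 3.0 build is still tested.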

It's worth pointing out that our current Talos system also skips builds if there's any backlog. However, our goal is to turn off skipping once we have enough slaves in the pool to handle our peak loads comfortably.

After this initial batch is up and running, we'll be waiting for a suitable time to start moving the existing Talos slaves into the pool.

All in all, this should be a big win for everyone!

I've managed to harvest quite a few radishes already, and even some cilantro and basil! I'm a bit worried about the garlic...it looks a bit sickly lately. The onions look great though! I haven't had too many problems with pests lately. There are nibbles on some leaves of most plants, but nothing really major.

I love going out to check how the plants are growing every day. It looks like I'll be able to start harvesting some lettuce and swiss chard soon!

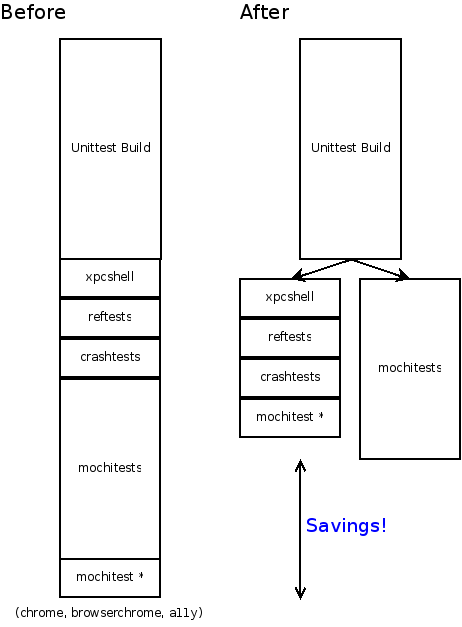

Splitting up the tests is a critical step towards reducing our end-to-end time, which is the total time elapsed between when a change is pushed into one of the source repositories, and when all of the results from that build are available. Up until now, you had to wait for all the test suites to be completed in sequence, which could take over an hour in total. Now that we can split the tests up, the wait time is determined by the longest test suite. The mochitest suite is currently the biggest chunk here, taking somewhere around 35 minutes to complete, and all of the other tests combined take around 20 minutes. One of the next steps for us to do is to look at splitting up the mochitests into smaller pieces.
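The arithmetic behind this is simple enough to sketch. The snippet below uses only the two rough figures quoted above, so it models "everything else" as a single 20-minute chunk; the function names are made up for illustration, and the real sequential time has been longer than this sum suggests.

```python
# Approximate durations in minutes, taken from the rough figures above.
suite_minutes = {
    "mochitest": 35,
    "all other suites combined": 20,
}


def sequential_wait(suites):
    # Suites run one after another, so the waits add up.
    return sum(suites.values())


def parallel_wait(suites):
    # Suites run at the same time, so the slowest one sets the wait.
    return max(suites.values())


sequential = sequential_wait(suite_minutes)
parallel = parallel_wait(suite_minutes)
```

Since the parallel wait is just the maximum, the only way to bring it down further is to shrink the largest chunk, which is why splitting up mochitest is the obvious next step.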

For the time being, we will continue to run the existing unit tests on the same machine that is creating the build. This is so that we can make sure that running tests on the packaged builds is giving us the same results (there are already some known differences:
