(caveat lector: this is a long post with lots of shell snippets and output; it's mostly a brain dump of what I did to investigate performance issues on hg.mozilla.org. I hope you find it useful. Scroll to the bottom for the summary.)

Everybody knows that pushing to try can be slow. but why?

while waiting for my push to try to complete, I wondered what exactly was slow.

I started by cloning my own version of try:

$ hg clone http://hg.mozilla.org try

destination directory: try

requesting all changes

adding changesets

adding manifests

adding file changes

added 95917 changesets with 447521 changes to 89564 files (+2446 heads)

updating to branch default

53650 files updated, 0 files merged, 0 files removed, 0 files unresolved

Next I instrumented hg so I could get some profile information:

$ sudo vi /usr/local/bin/hg

python -m cProfile -o /tmp/hg.profile /usr/bin/hg $*

Then I timed out long it took me to check what would be pushed:

$ time hg out ssh://localhost//home/catlee/mozilla/try

hg out ssh://localhost//home/catlee/mozilla/try 0.57s user 0.04s system 54% cpu 1.114 total

That's not too bad. Let's check our profile:

import pstats

pstats.Stats("/tmp/hg.profile").strip_dirs().sort_stats('time').print_stats(10)

Fri Dec 9 00:25:02 2011 /tmp/hg.profile

38744 function calls (37761 primitive calls) in 0.593 seconds

Ordered by: internal time

List reduced from 476 to 10 due to restriction

ncalls tottime percall cumtime percall filename:lineno(function)

13 0.462 0.036 0.462 0.036 {method 'readline' of 'file' objects}

1 0.039 0.039 0.039 0.039 {mercurial.parsers.parse_index2}

40 0.031 0.001 0.031 0.001 revlog.py:291(rev)

1 0.019 0.019 0.019 0.019 revlog.py:622(headrevs)

177/70 0.009 0.000 0.019 0.000 {__import__}

6326 0.004 0.000 0.006 0.000 cmdutil.py:15(parsealiases)

13 0.003 0.000 0.003 0.000 {method 'read' of 'file' objects}

93 0.002 0.000 0.008 0.000 cmdutil.py:18(findpossible)

7212 0.001 0.000 0.001 0.000 {method 'split' of 'str' objects}

392/313 0.001 0.000 0.007 0.000 demandimport.py:92(_demandimport)

The top item is readline() on file objects? I wonder if that's socket operations. I'm ssh'ing to localhost, so it's really fast. Let's add 100ms latency:

$ sudo tc qdisc add dev lo root handle 1:0 netem delay 100ms

$ time hg out ssh://localhost//home/catlee/mozilla/try

hg out ssh://localhost//home/catlee/mozilla/try 0.58s user 0.05s system 14% cpu 4.339 total

import pstats

pstats.Stats("/tmp/hg.profile").strip_dirs().sort_stats('time').print_stats(10)

Fri Dec 9 00:42:09 2011 /tmp/hg.profile

38744 function calls (37761 primitive calls) in 2.728 seconds

Ordered by: internal time

List reduced from 476 to 10 due to restriction

ncalls tottime percall cumtime percall filename:lineno(function)

13 2.583 0.199 2.583 0.199 {method 'readline' of 'file' objects}

1 0.054 0.054 0.054 0.054 {mercurial.parsers.parse_index2}

40 0.028 0.001 0.028 0.001 revlog.py:291(rev)

1 0.019 0.019 0.019 0.019 revlog.py:622(headrevs)

177/70 0.010 0.000 0.019 0.000 {__import__}

13 0.006 0.000 0.006 0.000 {method 'read' of 'file' objects}

6326 0.002 0.000 0.004 0.000 cmdutil.py:15(parsealiases)

93 0.002 0.000 0.006 0.000 cmdutil.py:18(findpossible)

392/313 0.002 0.000 0.008 0.000 demandimport.py:92(_demandimport)

7212 0.001 0.000 0.001 0.000 {method 'split' of 'str' objects}

Yep, definitely getting worse with more latency on the network connection.

Oh, and I'm using a recent version of hg:

$ hg --version

Mercurial Distributed SCM (version 2.0)

$ echo hello | ssh localhost hg -R /home/catlee/mozilla/try serve --stdio

145

capabilities: lookup changegroupsubset branchmap pushkey known getbundle unbundlehash batch stream unbundle=HG10GZ,HG10BZ,HG10UN httpheader=1024

This doesn't match what hg.mozilla.org is running:

$ echo hello | ssh hg.mozilla.org hg -R /mozilla-central serve --stdio

67

capabilities: unbundle lookup changegroupsubset branchmap stream=1

So it must be using an older version. Let's see what mercurial 1.6 does:

$ mkvirtualenv hg16

New python executable in hg16/bin/python

Installing setuptools...

(hg16)$ pip install mercurial==1.6

Downloading/unpacking mercurial==1.6

Downloading mercurial-1.6.tar.gz (2.2Mb): 2.2Mb downloaded

...

(hg16)$ hg --version

Mercurial Distributed SCM (version 1.6)

(hg16)$ echo hello | ssh localhost /home/catlee/.virtualenvs/hg16/bin/hg -R /home/catlee/mozilla/mozilla-central serve --stdio

75

capabilities: unbundle lookup changegroupsubset branchmap pushkey stream=1

That looks pretty close to what hg.mozilla.org claims it supports, so let's time 'hg out' again:

(hg16)$ time hg out ssh://localhost//home/catlee/mozilla/try

hg out ssh://localhost//home/catlee/mozilla/try 0.73s user 0.04s system 3% cpu 24.278 total

tl;dr

Finding missing changesets between two local repositories is

6x slower with hg 1.6 (4 seconds with hg 2.0 to 24 seconds hg 1.6). Add a few hundred people and machines hitting the same repository at the same time, and I imagine things can get bad pretty quickly.

Some further searching reveals that mercurial does support a

faster method of finding missing changesets in "newer" versions, although I can't figure out exactly when this change was introduced. There's already a

bug on file for upgrading mercurial on hg.mozilla.org, so hopefully that improves the situation for pushes to try.

The tools we use everyday aren't magical; they're subject to normal debugging and profiling techniques. If a tool you're using is holding you back, find out why!

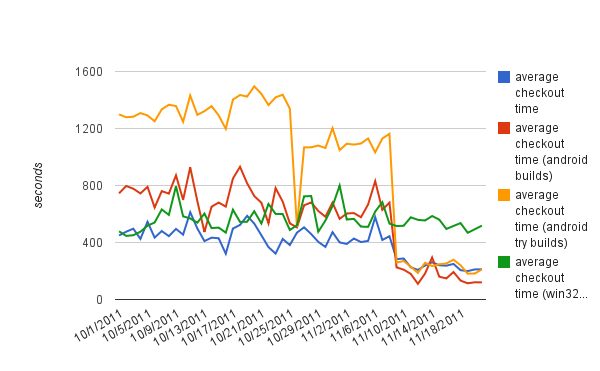

(click through for interactive goodness)

This is a report of how much time people have racked up on try server over the last 7 days. This isn't meant to be a wall of shame (or fame...). I hope that by publishing this people realize in some ways the costs their try pushes have on the infrastructure. This cost impacts not only yourself, but other people waiting for results on other branches.

If you really need those results from try, then by all means use it, it's there for you!

Please keep in mind though, if you have the results you need from try already, cancelling the remaining jobs is just a click away on tbpl.

(click through for interactive goodness)

This is a report of how much time people have racked up on try server over the last 7 days. This isn't meant to be a wall of shame (or fame...). I hope that by publishing this people realize in some ways the costs their try pushes have on the infrastructure. This cost impacts not only yourself, but other people waiting for results on other branches.

If you really need those results from try, then by all means use it, it's there for you!

Please keep in mind though, if you have the results you need from try already, cancelling the remaining jobs is just a click away on tbpl.