Packing bits with Rust & Ruby

The missing C of CHD

One element of the CHD (compress-hash-displace) algorithm that I didn't implement in my previous post was the "compress" part.

This algorithm generates an auxiliary table of seeds that are used to prevent hash collisions in the data set. These seeds need to be encoded somehow and transmitted along with the rest of the data in order to perform lookups later on.

The number of seeds (called r in the algorithm) is usually proportional to the number of elements in the input.

A larger r makes it easier to find seeds that avoid collisions, and therefore faster to compute the perfect hash. Reducing r results in a more compact data structure at the expense of more computation up front.
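To make the role of the seed table concrete, here is a minimal sketch of a simplified hash-and-displace scheme. It is not the exact displacement formula from the CHD paper, nor the code from my previous post. The hash (FNV-1a followed by a SplitMix64 finalizer), the bucketing rule and the names `bucket_of`, `slot_of`, `build` and `lookup` are all hypothetical choices for illustration. Keys are split into r buckets, and each bucket gets the smallest seed, tried from 0 upward, that sends all of its keys to free slots. A lookup needs only the key and the `seeds` table, which is why that table has to be stored with the data.

```rust
use std::cmp::Reverse;
use std::collections::HashSet;

/// 64-bit FNV-1a hash of a string (illustrative choice of hash).
fn fnv1a(key: &str) -> u64 {
    let mut h: u64 = 0xcbf2_9ce4_8422_2325;
    for b in key.bytes() {
        h ^= b as u64;
        h = h.wrapping_mul(0x0000_0100_0000_01b3);
    }
    h
}

/// SplitMix64 finalizer, used to scramble the hash for each seed.
fn mix(mut z: u64) -> u64 {
    z = (z ^ (z >> 30)).wrapping_mul(0xbf58_476d_1ce4_e5b9);
    z = (z ^ (z >> 27)).wrapping_mul(0x94d0_49bb_1331_11eb);
    z ^ (z >> 31)
}

/// Which of the `r` buckets a key belongs to (hypothetical rule).
fn bucket_of(key: &str, r: usize) -> usize {
    (fnv1a(key) % r as u64) as usize
}

/// Slot in 0..n that `key` lands in when hashed with `seed`.
fn slot_of(key: &str, seed: u32, n: usize) -> usize {
    let seeded = fnv1a(key) ^ (seed as u64).wrapping_mul(0x9e37_79b9_7f4a_7c15);
    (mix(seeded) % n as u64) as usize
}

/// Find one seed per bucket so every key gets its own slot in 0..keys.len().
/// Assumes the keys are distinct.
fn build(keys: &[&str], r: usize) -> Vec<u32> {
    let n = keys.len();
    let mut buckets: Vec<Vec<&str>> = vec![Vec::new(); r];
    for &k in keys {
        buckets[bucket_of(k, r)].push(k);
    }
    // Place the largest buckets first, while most slots are still free.
    let mut order: Vec<usize> = (0..r).collect();
    order.sort_by_key(|&i| Reverse(buckets[i].len()));

    let mut taken = vec![false; n];
    let mut seeds = vec![0u32; r];
    for i in order {
        if buckets[i].is_empty() {
            continue; // empty buckets keep seed 0
        }
        // Try seeds 0, 1, 2, ... until the whole bucket fits.
        let mut seed = 0u32;
        loop {
            let slots: Vec<usize> = buckets[i].iter().map(|k| slot_of(k, seed, n)).collect();
            let mut seen = HashSet::new();
            if slots.iter().all(|&s| !taken[s] && seen.insert(s)) {
                for &s in &slots {
                    taken[s] = true;
                }
                seeds[i] = seed;
                break;
            }
            seed += 1;
        }
    }
    seeds
}

/// A lookup needs only the key and the seed table.
fn lookup(key: &str, seeds: &[u32], n: usize) -> usize {
    slot_of(key, seeds[bucket_of(key, seeds.len())], n)
}

fn main() {
    let keys = ["apple", "banana", "cherry", "date", "elderberry", "fig", "grape", "honeydew"];
    let seeds = build(&keys, 3);
    println!("seeds: {:?}", seeds);
    for k in keys {
        println!("{} -> {}", k, lookup(k, &seeds, keys.len()));
    }
}
```

Passing a smaller r to `build` puts more keys into each bucket, so more seeds tend to be tried before a bucket fits, which is the trade-off described above.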

Packing seeds

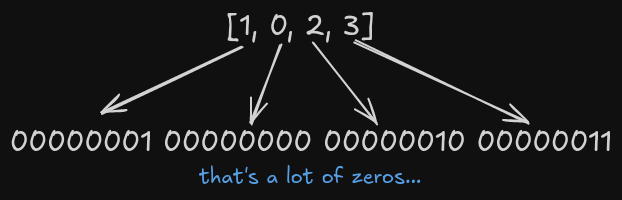

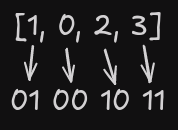

Seeds are generally tried starting from 0, and typically don't end up being very large. Encoding these values as a basic array of 8/16/32-bit integers is a waste of space.
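A rough cost model makes the waste concrete (a simplification that ignores any per-block metadata): if the r seeds are all at most $s_{\max}$, storing them in a fixed-width array of w-bit integers and packing them at b bits each cost

$$
b = \lceil \log_2(s_{\max} + 1) \rceil, \qquad
\text{fixed width} = w \cdot r \ \text{bits}, \qquad
\text{packed} = b \cdot r \ \text{bits}.
$$

For instance, if no seed exceeds 7 then b = 3, so packing needs 3/32 of the space of a u32 array and 3/8 of the space of a u8 array.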

I wanted to improve on my implementation of efficient encoding of hashes by doing some simple bit packing of the seeds.

The basic idea is that for a set of integers, we find the maximum value, and therefore the maximum number of bits (b) needed to represent that value. We can then encode all the integers using b bits instead of a fixed number of bits.
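The idea fits in a few lines of std-only Rust. This is a simplified illustration, not how the bitpacking crate works internally (the crate uses SIMD and fixed-size blocks, and its output is not meant to match this byte layout). The names `num_bits`, `pack` and `unpack` are hypothetical. Values are written least-significant bit first into a `u64` accumulator that is flushed one byte at a time.

```rust
/// Bits needed for the largest value (0 if every value is 0).
fn num_bits(values: &[u32]) -> u32 {
    let max = values.iter().copied().max().unwrap_or(0);
    32 - max.leading_zeros()
}

/// Pack each value into exactly `b` bits, least-significant bits first.
/// Assumes every value fits in `b` bits (e.g. `b` came from `num_bits`).
fn pack(values: &[u32], b: u32) -> Vec<u8> {
    let mut out = Vec::with_capacity((values.len() * b as usize + 7) / 8);
    let mut acc: u64 = 0; // pending bits not yet written out
    let mut filled = 0u32; // number of bits of `acc` in use (always < 8 here)
    for &v in values {
        acc |= (v as u64) << filled;
        filled += b;
        while filled >= 8 {
            out.push(acc as u8); // emit the lowest byte
            acc >>= 8;
            filled -= 8;
        }
    }
    if filled > 0 {
        out.push(acc as u8); // final partial byte, zero-padded
    }
    out
}

/// Inverse of `pack`: read `count` values of `b` bits each.
fn unpack(bytes: &[u8], b: u32, count: usize) -> Vec<u32> {
    let mask: u64 = if b == 0 { 0 } else { (1u64 << b) - 1 };
    let mut values = Vec::with_capacity(count);
    let mut acc: u64 = 0;
    let mut filled = 0u32;
    let mut iter = bytes.iter();
    for _ in 0..count {
        // Pull in whole bytes until at least `b` bits are buffered.
        while filled < b {
            acc |= (*iter.next().expect("input too short") as u64) << filled;
            filled += 8;
        }
        values.push((acc & mask) as u32);
        acc >>= b;
        filled -= b;
    }
    values
}

fn main() {
    let data: Vec<u32> = (0..128).map(|i| i % 8).collect();
    let b = num_bits(&data); // values 0..=7 need 3 bits
    let packed = pack(&data, b);
    println!("{} values, {} bits each, {} bytes", data.len(), b, packed.len());
    assert_eq!(unpack(&packed, b, data.len()), data);
}
```

The number of values is not stored in the packed bytes, so it has to be recorded separately (alongside b) to decode them again.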

There's a Rust crate, bitpacking, that does exactly this! And it runs super duper fast, assuming that you can arrange your data into groups of 32/128/256 integers. The API is really simple to use as well:

```rust
use bitpacking::{BitPacker, BitPacker4x};

fn main() {
    // 128 integers, all in 0..8, so 3 bits each is enough.
    let data: Vec<u32> = (0..128).map(|i| i % 8).collect();

    let packer = BitPacker4x::new();
    // Smallest bit width that can hold every value in the block.
    let num_bits = packer.num_bits(&data);

    // Worst case: 32 bits (4 bytes) per integer.
    let mut compressed = vec![0u8; 4 * BitPacker4x::BLOCK_LEN];
    let len = packer.compress(&data, compressed.as_mut_slice(), num_bits);
    compressed.truncate(len);

    println!("Compressed data: {:?}", compressed);
}
```
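A seed table rarely holds an exact multiple of 128 values. One simple way to meet the fixed block size, sketched here as an assumption rather than what the gem actually does, is to split the input into 128-value blocks and zero-pad the last one. Zeros never raise a block's bit width, and the true length is kept separately so the padding can be dropped after decompression. `BLOCK_LEN` is a local constant mirroring `BitPacker4x::BLOCK_LEN` (128), and `to_blocks`/`from_blocks` are hypothetical helpers.

```rust
/// Mirrors bitpacking's BitPacker4x::BLOCK_LEN.
const BLOCK_LEN: usize = 128;

/// Split `values` into BLOCK_LEN-sized blocks, zero-padding the last one.
fn to_blocks(values: &[u32]) -> Vec<[u32; BLOCK_LEN]> {
    values
        .chunks(BLOCK_LEN)
        .map(|chunk| {
            let mut block = [0u32; BLOCK_LEN];
            block[..chunk.len()].copy_from_slice(chunk);
            block
        })
        .collect()
}

/// Undo `to_blocks`, given the original number of values.
fn from_blocks(blocks: &[[u32; BLOCK_LEN]], len: usize) -> Vec<u32> {
    blocks.iter().flatten().copied().take(len).collect()
}

fn main() {
    let seeds: Vec<u32> = (0..300).map(|i| i % 5).collect();
    let blocks = to_blocks(&seeds);
    // 300 values -> 3 blocks; the last holds 44 real values + 84 zeros.
    println!("{} blocks", blocks.len());
    // Each block could then go through BitPacker4x::num_bits and compress.
    assert_eq!(from_blocks(&blocks, seeds.len()), seeds);
}
```

Padding with zeros costs at most one partial block of extra packed values, which is small next to the savings from packing every block at its own bit width.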

Bridging the gap between Rust & Ruby

I wanted to use this from Ruby code, though... time to bust out magnus!

Magnus is a crate which makes it really easy to write Ruby extensions using Rust. It takes care of most of the heavy lifting of converting to/from Ruby & Rust types.

```rust
use bitpacking::BitPacker; // trait providing compress() and BLOCK_LEN
use magnus::{RString, Ruby};

#[magnus::wrap(class = "BitPacking::BitPacker4x")]
struct BitPacker4x(bitpacking::BitPacker4x);

impl BitPacker4x {
    // ...

    fn compress(
        ruby: &Ruby,
        rb_self: &Self,
        decompressed: Vec<u32>,
        num_bits: u8,
    ) -> RString {
        // Worst case: 4 bytes per integer in one block.
        let mut compressed = vec![0u8; 4 * bitpacking::BitPacker4x::BLOCK_LEN];
        let len = rb_self
            .0 // refers to underlying bitpacking::BitPacker4x struct
            .compress(&decompressed, compressed.as_mut_slice(), num_bits);
        compressed.truncate(len);
        // Return the packed bytes to Ruby as a String.
        ruby.str_from_slice(compressed.as_slice())
    }
}
```

This lets me write Ruby code like this:

```ruby
data = 128.times.map { |i| i % 8 }

packer = BitPacking::BitPacker4x.new
num_bits = packer.num_bits(data)
compressed = packer.compress(data, num_bits)
```

Here these 128 integers are represented in 48 bytes, or 3 bits per integer (128 × 3 = 384 bits = 48 bytes), instead of the 512 bytes they would take as plain 32-bit integers.

BitPacking gem

I've packaged this up into the bitpacking gem.

I hope you find this useful!