Buildbot is a critical part of our build infrastructure at Mozilla. We use it to manage builds on 5 different platforms (Linux, Mac, Windows, Maemo and Windows Mobile), and 5 different branches (mozilla-1.9.1, mozilla-central, TraceMonkey, Electrolysis, and Places). All in all we have 80 machines doing builds across 150 different build types (not counting Talos; all the Talos test runs and slaves are managed by a different master).

And buildbot is at the center of it all.

The load on our machine running buildbot is normally fairly high, and occasionally spikes so that the buildbot process is unresponsive. It normally restores itself within a few minutes, but I'd really like to know why it's doing this!

Running our staging buildbot master with python's

cProfile module for almost two hours yields the following profile:

ncalls tottime percall cumtime percall filename:lineno(function)

416377 4771.188 0.011 4796.749 0.012 {select.select}

52 526.891 10.133 651.043 12.520 /tools/buildbot/lib/python2.5/site-packages/buildbot-0.7.10p1-py2.5.egg/buildbot/status/web/waterfall.py:834(phase2)

6518 355.370 0.055 355.370 0.055 {posix.fsync}

232582 238.943 0.001 1112.039 0.005 /tools/twisted-8.0.1/lib/python2.5/site-packages/twisted/spread/banana.py:150(dataReceived)

10089681 104.395 0.000 130.089 0.000 /tools/twisted-8.0.1/lib/python2.5/site-packages/twisted/spread/banana.py:36(b1282int)

36798140/36797962 83.536 0.000 83.537 0.000 {len}

29913653 70.458 0.000 70.458 0.000 {method 'append' of 'list' objects}

311 63.775 0.205 63.775 0.205 {bz2.compress}

10088987 56.581 0.000 665.982 0.000 /tools/twisted-8.0.1/lib/python2.5/site-packages/twisted/spread/banana.py:141(gotItem)

4010792/1014652 56.079 0.000 176.693 0.000 /tools/twisted-8.0.1/lib/python2.5/site-packages/twisted/spread/jelly.py:639(unjelly)

2343910/512709 47.954 0.000 112.446 0.000 /tools/twisted-8.0.1/lib/python2.5/site-packages/twisted/spread/banana.py:281(_encode)

Interpreting the results

select shows up in the profile because we're profiling wall clock time, not cpu time. So the more time we're spending in

select, the better, since that means we're just waiting for data. The overall run time for this profile was 7,532 seconds, so

select is taking around 63% of our total time. I believe the more time spent here, the better. Time spent inside

select is idle time.

We already knew that the buildbot waterfall was slow (the second line in profile).

fsync isn't too surprising either. buildbot calls

fsync after writing log files to disk. We've considered removing this call, and this profile lends support to our original guess.

The next entries really surprised me, twisted's

dataReceived and a decoding function,

b1282int. These are called when processing data received from the slaves. If I'm reading this correctly, this means that

dataReceived and children account for around 40% of our total time after you remove the time spent in

select.

1112 / (7532-4796) = 40%.

These results are from our staging buildbot master, which doesn't have anywhere near the same load as the production buildbot master. I would expect that the time spent waiting in

select would go down on the production master (there's more data being received, more often), and that time spent in

fsync and

dataReceived would go up.

What to do about it?

A few ideas....

- Use psyco to get some JIT goodness applied to some of the slower python functions.

- Remove the

fsync call after saving logs.

- Use the cpu-time to profile rather than wallclock time. This will give a different perspective on the performance of buildbot, which should give better information about where we're spending time processing data.

- Implement slow pieces in C (or cython). Twisted's Banana library looks do-able in C, and also is high up in the profile.

- Send less data from the slaves. We're currently logging all stdout/stderr produced by the slaves. All of this data is processed by the master process and then saved to disk.

- Rearchitect buildbot to handle this kind of load.

- Have more than one buildbot master, each one handling fewer slaves. We're actively looking into this approach, since it also allows us to have some redundancy for this critical piece of our infrastructure.

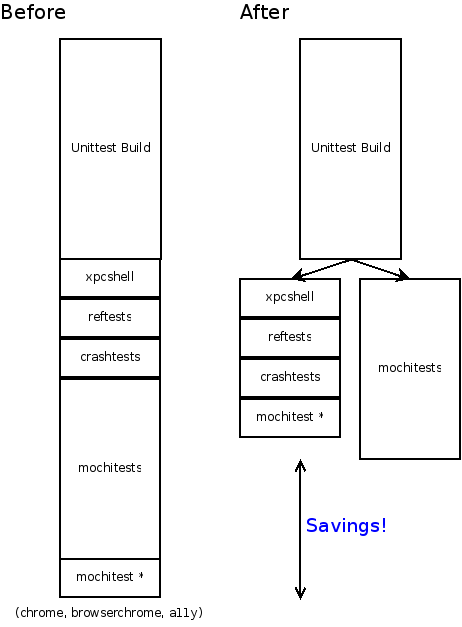

Splitting up the tests is a critical step towards reducing our end-to-end time, which is the total time elapsed between when a change is pushed into one of the source repositories, and when all of the results from that build are available. Up until now, you had to wait for all the test suites to be completed in sequence, which could take over an hour in total. Now that we can split the tests up, the wait time is determined by the longest test suite. The mochitest suite is currently the biggest chunk here, taking somewhere around 35 minutes to complete, and all of the other tests combined take around 20 minutes. One of the next steps for us to do is to look at splitting up the mochitests into smaller pieces.

For the time being, we will continue to run the existing unit tests on the same machine that is creating the build. This is so that we can make sure that running tests on the packaged builds is giving us the same results (there are already some known differences:

Splitting up the tests is a critical step towards reducing our end-to-end time, which is the total time elapsed between when a change is pushed into one of the source repositories, and when all of the results from that build are available. Up until now, you had to wait for all the test suites to be completed in sequence, which could take over an hour in total. Now that we can split the tests up, the wait time is determined by the longest test suite. The mochitest suite is currently the biggest chunk here, taking somewhere around 35 minutes to complete, and all of the other tests combined take around 20 minutes. One of the next steps for us to do is to look at splitting up the mochitests into smaller pieces.

For the time being, we will continue to run the existing unit tests on the same machine that is creating the build. This is so that we can make sure that running tests on the packaged builds is giving us the same results (there are already some known differences: