Gotta Cache 'Em All

TOO MUCH TRAFFIC!!!!

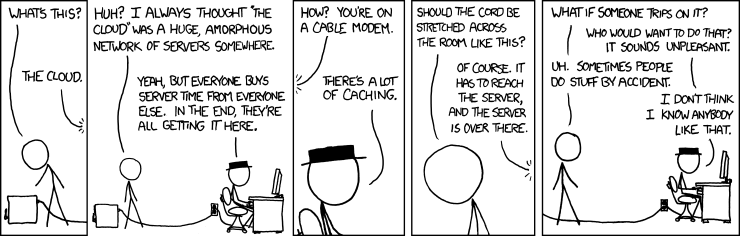

Waaaaaaay back in February we identified overall network bandwidth as a cause of job failures on TBPL. We were pushing too much traffic over our VPN link between Mozilla's datacentre and AWS. Since then we've been working on a few approaches to cope with the increased traffic while at the same time reducing our overall network load. Most recently we've deployed HTTP caches inside each AWS region.

The answer - cache all the things!

Caching build artifacts

The primary target for caching was downloads of build/test/symbol packages by test machines from file servers. These packages are generated by the build machines and uploaded to various file servers. The same packages are then downloaded many times by different machines running tests. This was a perfect candidate for caching, since the same files were being requested by many different hosts in a relatively short timespan.
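To make the intuition concrete, here is a toy read-through cache in Python. It is only a sketch of the general idea, not how our caches are implemented: the `ReadThroughCache` class, the `fake_origin` file server, the example URL, and the 1000-byte package size are all made up for illustration.

```python
from typing import Callable, Dict


class ReadThroughCache:
    """Toy in-memory stand-in for an HTTP cache sitting in one region."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]) -> None:
        self._fetch = fetch_from_origin
        self._store: Dict[str, bytes] = {}
        self.origin_bytes = 0  # bytes pulled from the origin (i.e. over the VPN link)

    def get(self, url: str) -> bytes:
        # Only the first request for a URL goes to the origin;
        # every later request is served from the local store.
        if url not in self._store:
            body = self._fetch(url)
            self.origin_bytes += len(body)
            self._store[url] = body
        return self._store[url]


def fake_origin(url: str) -> bytes:
    # Hypothetical file server: every package is 1000 bytes.
    return b"x" * 1000


cache = ReadThroughCache(fake_origin)

# Twenty test machines ask for the same (hypothetical) test package.
for _ in range(20):
    cache.get("http://fileserver.example/build-1234/tests.zip")

print(cache.origin_bytes)  # 1000: the package crossed the link once, not twenty times
```

Without the cache, the same twenty downloads would have pulled 20,000 bytes from the origin.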

Caching tooltool downloads

Tooltool is a simple system RelEng uses to distribute static assets to build/test machines. While the machines do maintain a local cache of files, the caches are often empty because the machines are newly created in AWS. Having the files in local HTTP caches speeds up transfer times and decreases network load.
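The "local cache first, then the network" lookup can be sketched roughly as follows. This is not tooltool's actual code. It assumes assets are addressed by the SHA-512 digest of their contents, and `fetch_asset`, `fake_download`, and the cache directory layout are hypothetical names chosen for the example. In a real deployment the `download` callable would be an HTTP request to the regional cache.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Callable, List


def fetch_asset(digest: str, cache_dir: Path, download: Callable[[str], bytes]) -> bytes:
    """Return the asset from the local cache, downloading it on a miss."""
    local = cache_dir / digest
    if local.exists():
        return local.read_bytes()  # local hit: no network traffic at all
    data = download(digest)  # miss: a freshly created machine lands here
    # Verify the content before trusting or caching it.
    if hashlib.sha512(data).hexdigest() != digest:
        raise ValueError("digest mismatch for downloaded asset")
    local.write_bytes(data)
    return data


payload = b"static asset"
digest = hashlib.sha512(payload).hexdigest()
calls: List[str] = []


def fake_download(wanted: str) -> bytes:
    # Stand-in for an HTTP GET; records how often the network is used.
    calls.append(wanted)
    return payload


with tempfile.TemporaryDirectory() as tmp:
    first = fetch_asset(digest, Path(tmp), fake_download)   # downloads
    second = fetch_asset(digest, Path(tmp), fake_download)  # served locally

print(len(calls))  # 1
```

A brand-new machine always takes the miss path for its first request, which is why it matters how close the download source is.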

Results so far - 50% decrease in bandwidth

Initial deployment was completed on August 8th (end of week 32 of 2014). You can see from the graph above that we've cut our bandwidth by about 50%!

What's next?

There is still some low-hanging fruit left for caching. We have internal pypi repositories that could benefit from caches. There's a long tail of other miscellaneous downloads that could be cached as well.

There are other improvements we can make to reduce bandwidth as well, such as moving uploads from build machines outside the VPN tunnel, or perhaps sending them to S3 directly. Additionally, a big source of network traffic is the signing of various packages (gpg signatures, MAR files, etc.). We're looking at ways to do that more efficiently. I'd love to investigate more efficient ways of compressing or transferring build artifacts overall; there is a ton of duplication between the build and test packages, across different platforms, and even across different pushes.

I want to know MOAR!

Great! As always, all our work has been tracked in a bug, and worked out in the open. The bug for this project is 1017759. The source code lives in https://github.com/mozilla/build-proxxy/, and we have some basic documentation available on our wiki. If this kind of work excites you, we're hiring!

Big thanks to George Miroshnykov for his work on developing proxxy.