RelEng had a great start to

2015. We hit some major milestones on projects like Balrog and were able to turn

off some old legacy systems, which is always an extremely satisfying thing to do!

We also made some exciting new changes to the underlying infrastructure, got

some projects off the drawing board and into production, and drastically

reduced our test load!

Firefox updates

All Firefox update queries are now being served by Balrog! Earlier this year,

we switched all Firefox update queries off of the old update server,

aus3.mozilla.org, to the new update server, codenamed

Balrog.

Already, Balrog has enabled us to be much more flexible in handling updates

than the previous system. As an example, in bug

1150021, the About

Firefox dialog was broken in the Beta version of Firefox 38 for users with RTL

locales. Once the problem was discovered, we were able to quickly disable

updates just for those users until a fix was ready. With the previous system it

would have taken many hours of specialized manual work to disable the updates

for just these locales, and to make sure they didn't get updates for subsequent

Betas.
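To make the RTL example concrete, here is a minimal sketch of how priority-ordered update rules can block updates for just a few locales. It is an illustration only, not Balrog's actual schema or code: the `Rule` class, the `pick_update` function, the locale list, and the `example-beta-release` name are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Rule:
    priority: int                        # the highest-priority matching rule wins
    channel: str
    mapping: Optional[str]               # release to serve; None means "serve no update"
    locales: Optional[frozenset] = None  # None matches every locale

    def matches(self, channel, locale):
        return self.channel == channel and (
            self.locales is None or locale in self.locales
        )

def pick_update(rules, channel, locale):
    """Return the release name to serve, or None if no update should be offered."""
    candidates = [r for r in rules if r.matches(channel, locale)]
    if not candidates:
        return None
    return max(candidates, key=lambda r: r.priority).mapping

# Illustrative subset of RTL locales, not an authoritative list.
RTL_LOCALES = frozenset({"ar", "fa", "he"})

rules = [
    # Normal rule: everyone on the beta channel gets the current beta.
    Rule(priority=100, channel="beta", mapping="example-beta-release"),
    # Temporary higher-priority rule: RTL locales get no update until the fix lands.
    Rule(priority=200, channel="beta", mapping=None, locales=RTL_LOCALES),
]
```

With these rules, `pick_update(rules, "beta", "en-US")` returns the beta release, while `pick_update(rules, "beta", "he")` returns `None`. Removing the second rule re-enables updates for those users.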

Once we were confident that Balrog was able to handle all previous traffic, we

shut down the old update server (aus3).

aus3 was also one of the last systems relying on CVS (!! I know, rite?). It's a

great feeling to be one step closer to axing one more old system!

When we started the quarter, we had an exciting new plan for generating partial

updates for Firefox in a scalable way.

Then we threw out that plan and came up with an EVEN MOAR BETTER plan!

The new architecture

for funsize relies on Pulse for notifications

about new nightly builds

that need partial updates, and uses TaskCluster

for doing the generation of the partials and publishing to Balrog.

Currently, we're using funsize to generate partial updates for nightly builds, but they are not yet published to the regular nightly update channel.

There's lots more to say here...stay tuned!

FTP & S3

Brace yourselves... ftp.mozilla.org

is going away...

...in its current incarnation at least.

Expect to hear MUCH more about this in the coming months.

tl;dr is that we're migrating as much of the Firefox build/test/release

automation to S3 as possible.

The existing machinery behind ftp.mozilla.org will be going away near the end of Q3. We

have some ideas of how we're going to handle migrating existing content, as

well as handling new content. You should expect that you'll still be able to

access nightly and CI Firefox builds, but you may need to adjust your scripts

or links to do so.

Currently, most builds and tests transfer their files to and from S3 via the TaskCluster index, in addition to uploading in parallel to ftp.mozilla.org. We're aiming to shut off most uploads to ftp.mozilla.org this quarter.

Please let us know if you have particular systems or use cases that rely on the

current host or directory structure!

Our new Firefox release

pipeline got off the

drawing board, and the initial proof-of-concept work is done.

The main idea here is to take an existing build based on a push to mozilla-beta, and to "promote" it to a release build. To do that, we need to generate all the l10n repacks, partner repacks, and partial updates, publish files to CDNs, etc.

The big win here is that it cuts our time-to-release nearly in half, and also

simplifies our codebase quite a bit!

Again, expect to hear more about this in the coming months.

Infrastructure

In addition to all those projects in development, we also tackled quite a few

important infrastructure projects.

OS X 10.10 is now the most widely used Mac platform for Firefox, and it's important

to test what our users are running. We performed a rolling upgrade

of our OS X testing environment, migrating from 10.8 to 10.10 while spending

nearly zero capital, and with no downtime. We worked jointly with the Sheriffs

and A-Team to green up all the tests, and shut coverage off on the old platform

as we brought it up on the new one. We have a few 10.8 machines left riding the

trains that will join our 10.10 pool with the release of ESR 38.1.

Got Windows builds in AWS

We saw the first successful builds of Firefox for Windows in

AWS

this quarter as well! This paves the way for greater flexibility, on-demand

burst capacity, faster developer prototyping, and disaster recovery and

resiliency for Windows Firefox builds. We'll be working on making these

virtualized instances more performant and being able to do large-scale

automation before we roll them out into production.

Puppet on Windows

RelEng uses Puppet to manage our Linux and OS X infrastructure. Presently, we use a very different toolchain, Active Directory and Group Policy Objects, to manage our Windows infrastructure. This quarter we deployed a prototype Windows build machine which is managed with Puppet instead. Our goal here is to increase visibility and hackability of our Windows infrastructure. A common deployment tool will also make it easier for RelEng and the community to deploy new tools to our Windows machines.

We've redesigned and

deployed a new version

of tooltool, the

content-addressable store for large binary files used in build and test jobs.

Tooltool is now integrated with RelengAPI and uses S3 as a backing store. This

gives us scalability and a more flexible permissioning model that, in addition

to serving public files, will allow the same access outside the releng network

as inside. That means that developers as well as external automation like

TaskCluster can use the service just like Buildbot jobs. The new

implementation also boasts a much simpler HTTP-based upload mechanism that will

enable easier use of the service.
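For readers unfamiliar with the term, a content-addressable store names each file by a digest of its contents rather than by a path. The sketch below shows only that idea: the `ContentStore` class is hypothetical, an in-memory dict stands in for the S3 backing store, and SHA-512 is an assumed choice of digest. It is not tooltool's implementation.

```python
import hashlib

class ContentStore:
    """Toy content-addressable store: the key is the digest of the data."""

    def __init__(self):
        self._blobs = {}  # stand-in for a real backing store such as S3

    def put(self, data: bytes) -> str:
        digest = hashlib.sha512(data).hexdigest()
        self._blobs[digest] = data  # identical content always lands on the same key
        return digest

    def get(self, digest: str) -> bytes:
        data = self._blobs[digest]
        # Re-hash on the way out so corrupted content is never handed to a job.
        if hashlib.sha512(data).hexdigest() != digest:
            raise ValueError("stored content does not match its digest")
        return data

store = ContentStore()
key = store.put(b"large toolchain archive")
same_key = store.put(b"large toolchain archive")  # deduplicated: same key as above
```

Because the key is derived from the content, a job that records the digest gets exactly the bytes it asked for, and uploading the same file twice costs no extra storage.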

Centralized POSIX System Logging

Using syslogd/rsyslogd and Papertrail, we've set

up centralized system logging for all our POSIX infrastructure. Now that all

our system logs are going to one location and we can see trends across multiple

machines, we've been able to quickly identify and fix a number of previously

hard-to-discover bugs. We're planning on adding additional logs (like Windows

system logs) so we can do even greater correlation. We're also in the process

of adding more automated detection and notification of some easily recognizable

problems.

Security work

Q1 included some significant effort to protect against serious security vulnerabilities like

GHOST, privilege-escalation bugs in the Linux kernel, etc. We manage 14

different operating systems, some of which are fairly esoteric and/or no longer

supported by the vendor, and we worked to backport some code and patches to

some platforms while upgrading others entirely. Because of the way our

infrastructure is architected, we were able to do this with minimal downtime or

impact to developers.

API to manage AWS workers

As part of our ongoing effort to automate the loaning of releng

machines when required, we created an API layer to

facilitate the creation and loan of AWS resources, which was previously, and

perhaps ironically, one of the bigger time-sinks for buildduty when loaning

machines.

Release engineering is in the process of migrating from our stalwart, Buildbot-driven infrastructure to a newer, more purpose-built solution in TaskCluster. Many FirefoxOS jobs have already migrated, but those all conveniently run on Linux. In order to support the entire range of release engineering jobs, we need support for Mac and Windows as well. In Q1, we created what we call a "generic worker," essentially a base class that allows us to extend TaskCluster job support to non-Linux operating systems.

Testing

Last, but not least, we deployed initial support for

SETA,

the search for extraneous test automation!

This means we've stopped running all tests on all builds. Instead, we use historical data to determine which tests have been catching the most regressions, and prioritize running those. Other tests are run less frequently.
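One way to picture "use historical data to pick the tests that catch regressions" is a greedy selection over past failures. This is a hedged sketch of that general idea, not SETA's actual algorithm: the function name, the job names, and the shape of the `history` data are all made up for illustration.

```python
def pick_high_value_jobs(caught_by):
    """Greedily choose jobs until every past regression is covered.

    caught_by maps a regression id to the set of job names that failed on it.
    """
    uncovered = set(caught_by)
    chosen = []
    while uncovered:
        # Count how many still-uncovered regressions each job would have caught.
        counts = {}
        for regression in uncovered:
            for job in caught_by[regression]:
                counts[job] = counts.get(job, 0) + 1
        if not counts:
            break  # the remaining regressions were not caught by any job
        # Sorting first makes ties resolve alphabetically, so results are stable.
        best = max(sorted(counts), key=counts.get)
        chosen.append(best)
        uncovered = {r for r in uncovered if best not in caught_by[r]}
    return chosen

# Hypothetical history: which jobs failed for each past regression.
history = {
    "regression-1": {"mochitest-1", "xpcshell"},
    "regression-2": {"mochitest-1"},
    "regression-3": {"reftest", "xpcshell"},
    "regression-4": {"reftest"},
}

high_value = pick_high_value_jobs(history)
```

In this made-up history, `mochitest-1` and `reftest` together cover every past regression, so `xpcshell` would be a candidate for running less often.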

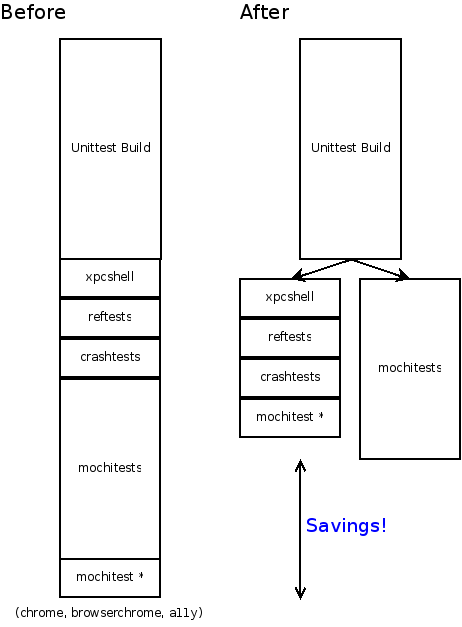

Splitting up the tests is a critical step towards reducing our end-to-end time, which is the total time elapsed between when a change is pushed into one of the source repositories and when all of the results from that build are available. Up until now, you had to wait for all the test suites to be completed in sequence, which could take over an hour in total. Now that we can split the tests up, the wait time is determined by the longest test suite. The mochitest suite is currently the biggest chunk here, taking somewhere around 35 minutes to complete, and all of the other tests combined take around 20 minutes. One of our next steps is to look at splitting up the mochitests into smaller pieces.
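The arithmetic behind that claim is simple enough to sketch. The snippet below counts only test time, using the rough per-suite figures quoted above, so the totals are illustrative rather than measured. The function name and the grouping of suites are assumptions made for the example.

```python
def end_to_end_minutes(suite_minutes, parallel):
    """Test wait time: the sum of suites when serial, the longest suite when parallel."""
    durations = list(suite_minutes.values())
    return max(durations) if parallel else sum(durations)

# Approximate durations taken from the paragraph above.
suites = {"mochitest": 35, "all other suites": 20}

in_sequence = end_to_end_minutes(suites, parallel=False)
in_parallel = end_to_end_minutes(suites, parallel=True)
```

Once the suites run side by side, the longest one sets the wait time, which is why splitting mochitest into smaller pieces is the obvious next step.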

For the time being, we will continue to run the existing unit tests on the same machine that is creating the build. This is so that we can make sure that running tests on the packaged builds is giving us the same results (there are already some known differences:
